New AI tools are a hot topic in the world of marketing right now. What can they do? What can’t they do? And what do you need to watch out for?

This is part one of a two-part series that looks at AI and its possible uses for insurance marketing. Will AI change everything about marketing and SEO, as many predict? Or are you better off sticking with your tried-and-true tools? First, we’ll take a look at what it is, where it’s used, and some of the pitfalls we all need to be aware of. In a follow-up post, we’ll dive into what it can actually help you with in your business.

In this post, we’ll cover:

- What Is AI? And What Does It Actually Do?
- 5 AI Products & Tools You Probably Already Use
- 4 Next-Gen Uses for AI
- AI Tools: Potential Pitfalls & Considerations
- AI Content: Potential Pitfalls & Considerations

No time to read? Watch our video overview:

What Is AI? And What Does It Actually Do?

First things first – what are we talking about when we say “AI”?

Let’s get a little meta and ask ChatGPT, an AI-powered chatbot, for an easy-to-understand definition of AI:

If you'd rather have a human-crafted explanation, try this on for size:

AI is the abbreviation for “artificial intelligence.” It refers to a computer program or algorithm that helps a machine complete a task that would otherwise require a human brain. These tasks include solving problems, writing code, creating artwork, writing content, and more.

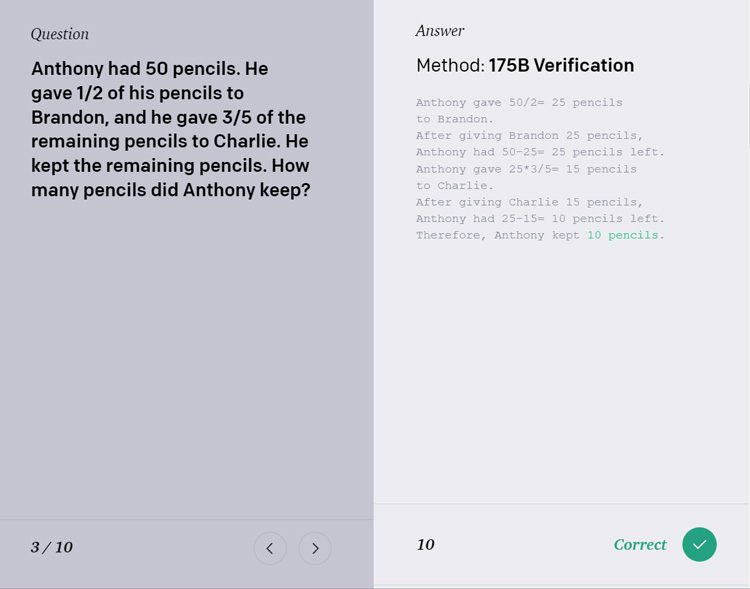

This is a big deal right now because of recent advances in the kinds of tasks AI can complete. Calculators, for example, have been able to help with math for decades: you give one a command (10 x 4) and it gives you the answer (40). But imagine a calculator that can solve an entire word problem, like this:

So how does AI learn to do what it does?

Current AI models have been trained on enormous amounts of material: public domain books, public datasets, internet content (including images and video), and more. Based on what it “reads,” an AI can then use what it “knows” to learn even more from new data it’s given.

This process is similar to the way humans learn a new language. You start small, with vocabulary words and short sentences. Over time, as you learn more words and how to put them together, you can speak longer sentences, have conversations, and eventually read and write in the new language.

AI works in a similar way. The more material it’s trained on, the better it gets at understanding new material and taking on more complicated tasks.

That’s what brings us to the current explosion of AI-driven products and tools. Many of these use AI technology from a company called OpenAI. Other AI producers include DeepMind and Doc.ai. These companies create algorithms and AI tools that can be deployed as the “brain” behind apps, games, e-commerce sites, and more.

5 AI Products & Tools You Probably Already Use

Although the internet has been buzzing with news about AI recently, most of us have been using some form of AI for years already – without even knowing it. Here are some examples:

- Digital assistants. If you use Siri, Alexa, or Google Home, you’re using a tool powered in part by AI. The more you use these programs, the better they get at learning your preferences and understanding your requests. That’s due to an AI running behind the scenes, which is learning based on data collected from its experiences with you and other users.

- Smart devices in your home. If you have a Roomba, a smart refrigerator, or smart thermostat, your device uses AI. For example, the Roomba j7+ was trained using a large database of images of fake pet messes to help it avoid the real thing.

- Facial recognition. If you use your face to unlock your phone, your phone uses AI to compare the saved image of your face with the new image to make sure it’s you.

- Social media & streaming platforms. How do these platforms know what to suggest you watch or read next? The algorithms they use are a form of AI that takes your personal preferences into account when making suggestions.

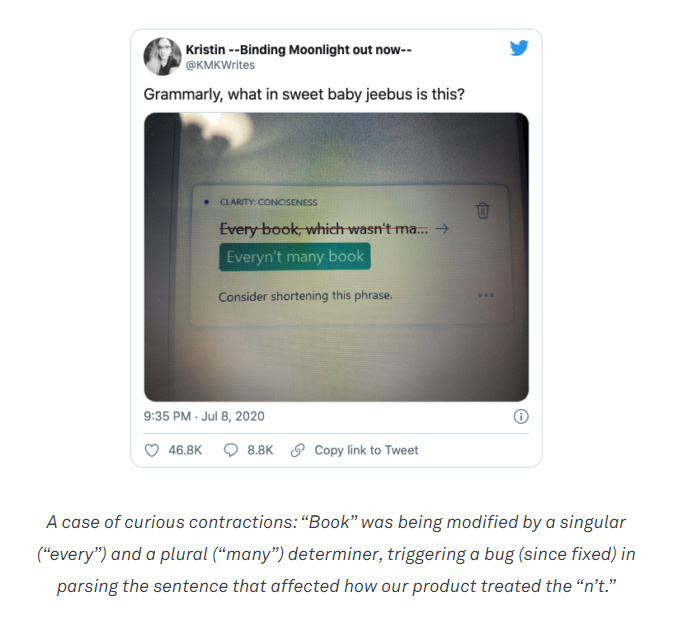

- Grammar checking. If you’ve used Grammarly, you’ve used a tool powered by AI to suggest corrections and improvements to your text. The app uses AI to spot common problems and offer potential solutions. The programmers even use the tool’s mistakes to improve its machine learning capabilities, as shown below in a screenshot where they explain a nonsensical suggestion.

4 Next-Gen Uses for AI

Thanks to advances in how AI understands and uses language, we have a new generation of products and tools that can handle complex tasks like creating code, art, video, and text-based content for you.

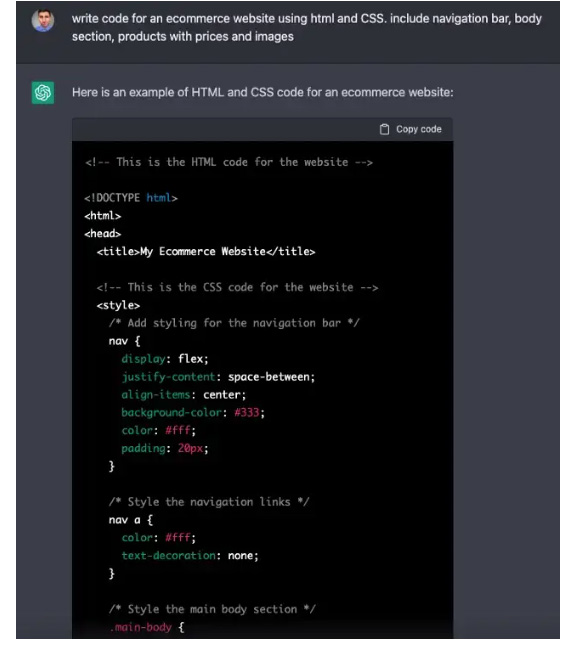

- Write code. We’ve had no-code tools for years now, but AI tools like GitHub Copilot (owned by Microsoft) and ChatGPT can generate code from a text prompt. For example, you can give ChatGPT a basic text prompt like this coder did (“write code for an ecommerce website using html and CSS…”) and you’ll get back code that does what you asked for:

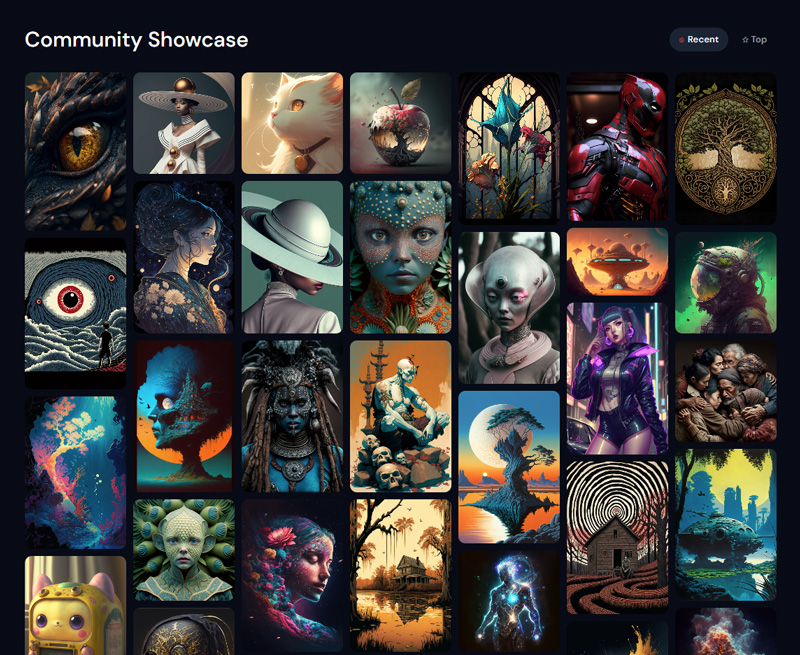

- Create images. AI tools like DALL-E 2 and Midjourney can create original artwork from a text prompt. More mainstream tools like Canva and Microsoft Image Creator now let you generate original images from prompts for personal and business use. Here’s just a sample of what people are using Midjourney to create:

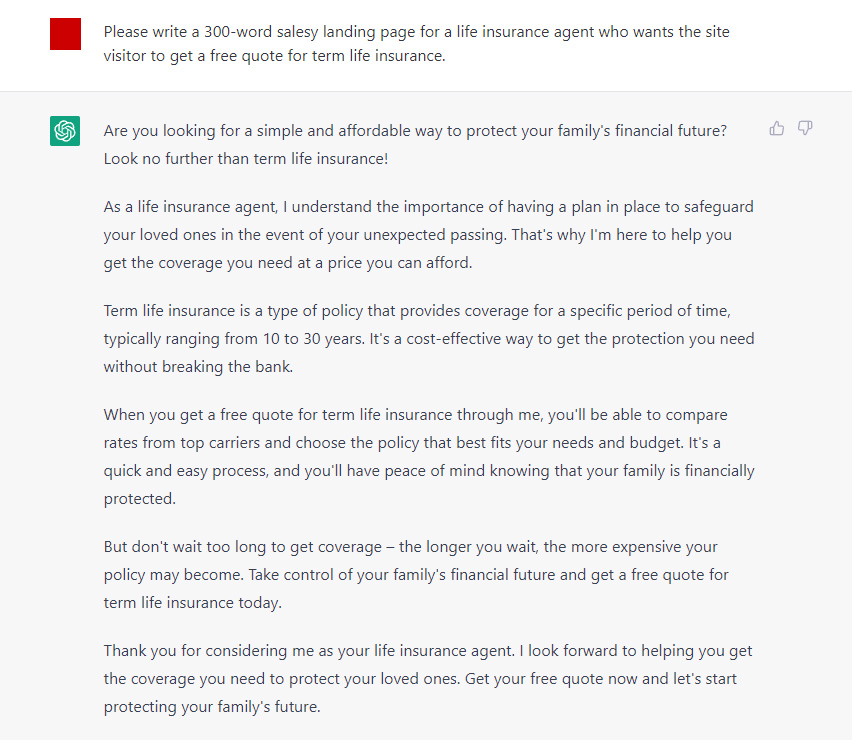

- Write text. ChatGPT is an AI-powered chatbot that can create just about any kind of content you ask for: blog posts, landing page text, recipes, cover letters, and even school essays. For example, here’s what we got when we asked it to write a 300-word landing page encouraging prospects to request a free term life insurance quote:

- Create videos. Feeling camera shy but want a “talking head” video to share with clients? You can create one with an AI avatar. Companies like Synthesia and D-ID have created realistic-looking avatars that will read your text on screen.

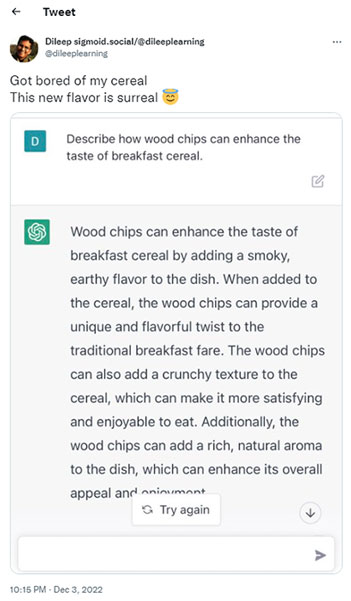

- Unethical Uses. As soon as ChatGPT was released, people found ways to have it suggest unsafe activities, do their homework, create fake resumes, create fake cited sources from fake peer-reviewed journals, and generate recipes for things like Molotov cocktails and enriched uranium. While some of these were tests to find the limits of what this particular tool can do, some people genuinely want to use AI to do bad things. But we’re in this business to help people. And there’s no “cheating” when it comes to doing the right thing for your client. We should keep an open mind about what AI can help us do for our clients, and avoid all unethical uses. As an example of how this technology can be used for nonsensical (but also possibly harmful) purposes, here’s what happened when one user asked it to describe how wood chips can enhance the taste of breakfast cereal:

- Legality of AI training material. There are lawsuits pending that claim some AI programs were trained using copyrighted material that should have been licensed. Since that material wasn’t licensed and creators weren’t asked for their consent beforehand, the lawsuits argue that the material was essentially stolen. One such lawsuit is aimed at Microsoft’s AI-assisted coding program, Copilot. Another item of concern is Lensa, a popular avatar image generator, which many artists claim was trained using their artwork without consent. We’ll have to wait and see how these lawsuits play out, but they may change the way we’re allowed to build, train, and use AI in the future.

- Proper Licensing. As tempting as it sounds to have AI spit out the content for your next marketing campaign (or a whole new website), consider this: is that use allowed by the AI’s terms and conditions of use? If you sign up to use any AI-powered service, make sure you read the terms and conditions. If it’s a freemium service, for example, commercial use is often only licensed with a paid account.

- Generic content. AI models have been trained for the most part on publicly available content – internet content, scanned public domain books, and Wikipedia. It doesn’t have access (yet) to anything that’s not on the internet. So even though it can create a blog post for you, it can’t include anything someone else hasn’t already said. Yes, it can synthesize information. But it can’t get into specifics relevant to our niche: carriers, policies, impairments, etc. All the stuff that makes insurance content niche, relevant, and helpful? It’s not coming from AI...yet.

- Duplicate content. What happens when 100 agents ask ChatGPT to write landing page copy to sell term life insurance? What happens when 1,000 agents ask for similar copy? Yes, the AI it can vary the words slightly each time. But unless specifically prompted to do otherwise, it uses the same sentence structure, the same flow, the same vocabulary every time. How is that going to impress clients? How is that going to help you stand out in a sea of sameness?

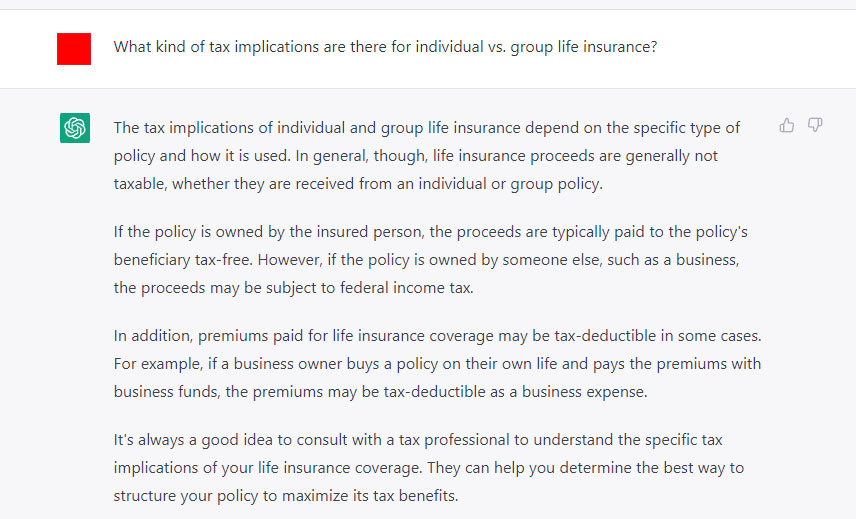

- Incorrect or incomplete content. Real people are always going to have to review the content provided by AI. There are documented cases of it providing 100% inaccurate information or simply overlooking important nuances or details. For example, we asked ChatGPT a potentially tricky question: what kind of tax implications are there for individual vs. group life insurance? The answer it gave us was true in a generic sense, but it missed a lot of nuance and focused solely on individual coverage:

A better answer would at least mention that the current face value threshold for zero tax liability in a group life insurance policy is $50,000. Other group coverage considerations include who pays for the coverage, and whether the employer is considered to “carry” that coverage. The AI answer didn’t bring up any of these specifics. - Potential Google penalties. Google’s John Mueller came right out and said it early in 2022: AI-generated content is against Google’s guidelines. Why? Because Google considers this content to be spam. And in August of 2022, the company’s Helpful Content Update was designed to penalize content that failed to provide enough information to truly help the end user. Of course, this begs the question: how does Google know what’s written by AI and what’s written by a human? This isn’t a question that can be answered just yet – but it’s something to keep in mind as we move forward in a world with so many new AI tools.

- Content that gets watermarked as AI content. This feature is already in the works. The company that produced ChatGPT, Open AI, is working on code that will watermark all AI content. It’s unclear as of yet how this will play out, but it indicates an intention to distinguish AI content from human-produced content wherever it appears.

AI Tools: Potential Pitfalls & Considerations

As amazing as some of these tools and concepts sound, we need to take a step back and think about how best to use them. Just because these tools can do certain things for us doesn’t mean we should ask them to.

Here are a few general considerations to keep in mind about AI:

AI Content: Potential Pitfalls & Considerations

Most of you out there are probably interested in how AI can help you produce content for your business: ad copy, landing page text, blog posts, client emails, social media posts, and more. Having automated help to produce all of that sounds great, doesn’t it? But here are a few considerations:

Phew! That’s a lot to think about.

And we haven’t even gotten to the fun stuff yet. But it’s important to know what a tool can’t or shouldn’t do before you start relying on what it can do. And there’s still a lot it can do, despite all these caveats! We’ll go over some specific examples in a follow-up post. Stay tuned!

That's part one of our look at AI and life insurance marketing!

What are your thoughts on AI tools? Have you used any? What was your experience like?